AI won't be allowed to do important things for the simple reason that the people in charge of important things won't let themselves be replaced. Only powerless people can be replaced. Engineers are not powerless, and this is easy to show: engineers are the people who design ML algorithms in the first place, so they aren't exactly in danger of "being replaced".

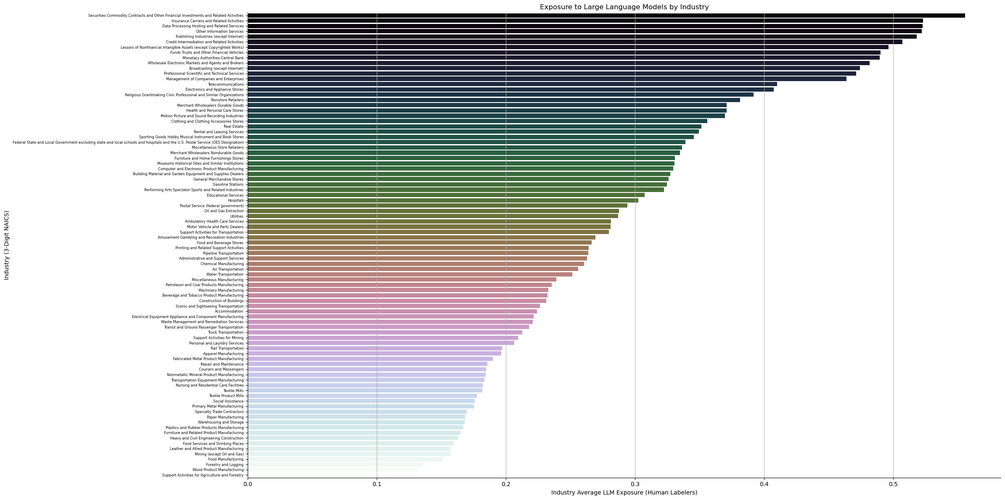

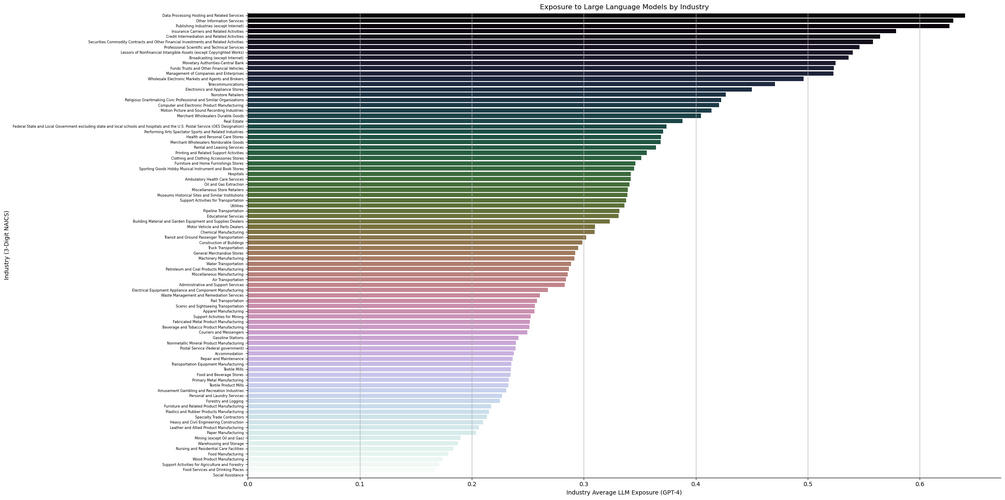

The only conceivable way for AI to replace human engineers is if, somehow, humans are no longer capable of being trained as engineers. Given that AI is on the fast track to eliminate a lot of the less useful degree fields and refocus universities on training people for important jobs like engineering, it is having the exact opposite effect. The only people being replaced at the moment are paralegals, journalists, and self-published romance novel writers.

These people are being replaced because AI produces work that is just as good, but approximately "a brazilian" times faster, with the expectation that the work still needs to be checked by an actual lawyer or actual editor. It needs checking because AI is too untrustworthy to be tasked with genuinely specialized knowledge: it will literally tell you nonsense in a very serious tone, it can barely follow simple logical intuition, and it isn't clear how to avoid this in the future, if it's even possible to avoid AI hallucinations without gutting the neural network entirely.

This is why there will be an AI winter in the coming years, and why some people think that everything OpenAI has done has now peaked: we've tapped out all the freebies from the massive gains in computational power, networking/communications, and storage since the 1980s, when neural networks trained with backpropagation first became popular.

I've also seen people say that the AI winter is already here, since the focus is all on neural networks to the exclusion of all other forms of machine learning and potential AI development. That's probably because people are hoping AI can solve a looming demographic-economic crisis caused by no one having kids anymore, and that simply won't happen. If anything AI will exacerbate the crisis, since the paralegals and journalists being laid off or furloughed won't be able to afford to get married, much less raise children, once the AI comes for their jobs.

At the end of the day, the main issue with neural networks and deep/machine learning algorithms seems to be hallucinations: they are simply too untrustworthy to not require babysitting by a trained human. Whether that human is a doctor looking at MRIs (DL), a lawyer looking at legal notices (ML), or an engineer trying to design an airplane (both) is irrelevant. ML algos are very good at producing rapid-fire general statements and standard template C&D letters, though.

Here is a brief paper that goes into DL, which is used for imaging analysis and other "unstructured" datasets, but I suspect there is similar mathematics involved in ML. The issue is that you can have a very stable algorithm that produces very good results and completely ignores anything it wasn't trained on, or you can have a very accurate algorithm that identifies all aspects of an image, but can be thrown off by hidden noise or draw false conclusions and present them to an operator.
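
To make that trade-off concrete, here is a toy Python sketch. It is not taken from the paper: the data points, the noise size, and both classifiers (`accurate_classifier` and `stable_classifier`) are made up purely for illustration, and real DL models are obviously far more complicated. It just shows one rule that fits every sample but flips its answer under a small nudge, next to another rule that never flips but is wrong half the time.

```python
# Toy illustration only: hypothetical data and classifiers, not from the paper.

def accurate_classifier(x: float) -> int:
    """Fits the toy data exactly by putting the decision boundary at 0.5."""
    return 1 if x > 0.5 else 0


def stable_classifier(x: float) -> int:
    """Ignores the input entirely, so no perturbation can change its answer."""
    return 0


def accuracy(classifier, samples) -> float:
    """Fraction of (x, label) samples the classifier labels correctly."""
    correct = sum(1 for x, label in samples if classifier(x) == label)
    return correct / len(samples)


def flip_rate(classifier, samples, noise: float) -> float:
    """Fraction of samples whose prediction changes when x shifts by +/- noise."""
    flipped = 0
    for x, _ in samples:
        base = classifier(x)
        if classifier(x + noise) != base or classifier(x - noise) != base:
            flipped += 1
    return flipped / len(samples)


# Made-up 1-D samples; two of them sit very close to the 0.5 boundary.
SAMPLES = [(0.10, 0), (0.30, 0), (0.48, 0), (0.52, 1), (0.70, 1), (0.90, 1)]
NOISE = 0.05  # assumed size of the "hidden noise"

if __name__ == "__main__":
    for name, clf in [("accurate", accurate_classifier),
                      ("stable", stable_classifier)]:
        print(name,
              "accuracy:", accuracy(clf, SAMPLES),
              "flip rate:", round(flip_rate(clf, SAMPLES, NOISE), 2))
```

On this toy data the accurate rule gets every label right but changes its answer on the two samples near the boundary, while the stable rule never changes its answer and only gets half the labels right.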

The hard part is finding the right trade-offs between stability and accuracy in specific fields. This would require AI to become much more common than it is now. Dr. Watson typically isn't going to do much better than your GP, but he might give you more specialist referrals, I suppose, and tie up more money in the healthcare system. Give it a few more decades and we might have a better idea of how to shake out something useful beyond the "generic terms of service generation" and various boilerplate that GPT-3 does. Then a few more decades after that to get that useful thing into...use.

Suffice it to say AI is just having a moment because the engineers discovered that bitcoin miners and cheap hard disks are real now. All the maths was done 30-40 years ago though, with very primitive models showing up in the 1990s. It's no big deal to anyone who does specialized work, or is an actual investigative journalist or field reporter like the old VICE stuff, but it might be a big deal to someone who just punches the clock as a paralegal at a medium-sized law firm.

Once people realize that most ML stuff is essentially toys and that the esoteric mathematicians haven't figured out how to make a PhD golem yet, they will lose interest, the money will dry up, and the AI field will become a backwater. Again. Like particle physics did in 2012, like quantum computing will in a few years, and like the AI field was from around 1993 to 2016.

Your job is more likely to vanish due to economic contraction from demographics than due to economic redistribution from cybernetics.