Quoting a source means that you are acknowledging their ownership of the material, and quotation for purposes such as criticism and comment is among the uses the law explicitly lists as examples of fair use.

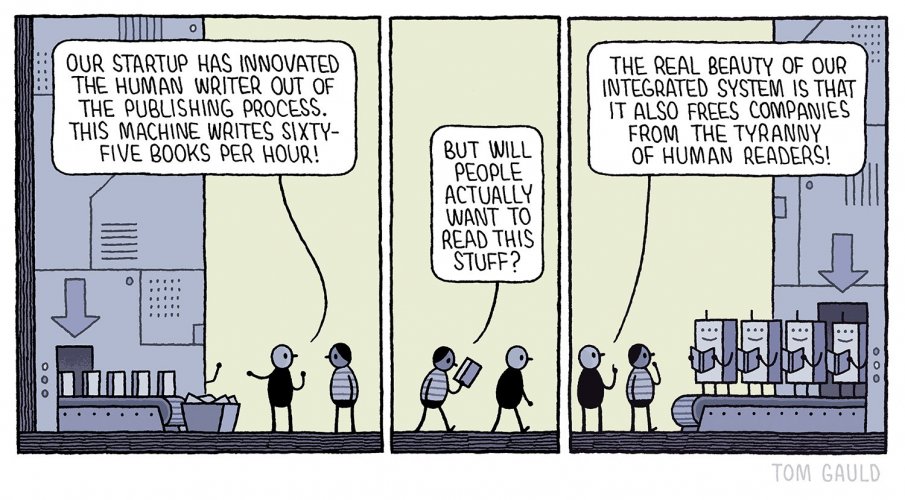

If the material is being used for commercial purposes, as almost any use of an LLM would be, a court is far less likely to find it to be fair use. The purpose and context of the use are central to the fair use doctrine. For example, the following uses favor a finding of fair use:

- Nonprofit use

- Teaching or education

- Political / social commentary or satire (e.g. discussing the social impact of the protected work in the press)

The nature of the protected work can be a factor. If you were to write a book about, say, a ship, and that book was filled with factual information, a case could be made that those facts, your presentation of them, and your expression are open to fair use, even though researching, verifying, and publishing those facts was done at considerable expense. But if the book was mostly fiction or another "creative" work, using it would be less likely to qualify as fair use.

How the protected work is used, and how much of it, is also a factor. Quoting individual lines or paragraphs from a published work, with attribution, would generally be found to be fair use (though the author can still press a claim over permission or attribution, and can revoke a permission that was only assumed). Using the entire protected work, however, is generally not going to be considered fair use.

And here is where we start running into problems!

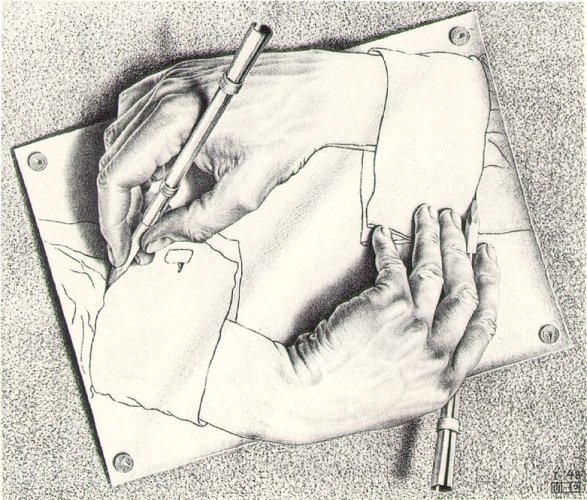

LLMs are trained on corpora of data. They regurgitate outputs that are the product of statistical models built from that training. There are good arguments that an LLM uses the complete protected work, and most LLMs do so for a commercial purpose without the permission of the copyright holder. In many cases they also do it at the copyright holder's expense: LLM training software scours the internet for content and downloads it, and the LLM then effectively repackages and resells it as "output".

Let's say an LLM uses one of my posts on the forum as training data, and I know this, for example because it quotes or attributes me. Through various means I can ask or force the LLM owner to remove my content from their training data. Doing so, though, is very difficult for the LLM owner, and in most cases would mean retraining their LLM from scratch without my content.

That gets expensive fast.

And as far as citing sources goes, LLMs in general have no special handling for citations or quotes. They have no real concept of what an APA citation, footnote, or link looks like, nor any context for one. Links are all treated the same: an LLM has no way to recognize that a link is a source or attribution in context, nor any way to validate that the link is what it says it is. That includes links in its own output, something I have seen frequently with LLMs. Their citations / sources are Wikipedia-quality (i.e. made up).